I just read a new research paper that concluded that the European Energy Label had no discernible effect on the willingness to pay for efficient products, while providing information on total cost of ownership did. The only explanation provided for why that might be the case was that the range of efficiency classes for products currently sold on the EU market is narrow – but this was just a guess, and says nothing about the decision-making process or why total cost of ownership was more compelling.

At Enervee, we’ve been conducting research – even before the company was launched – to understand how people decide what to buy. This body of knowledge, together with the behavioral science literature and insights that guide our own research, has informed the design of our award-winning, consumer-facing online Choice Engines for utilities, which operate on the basis of behavioral nudges (and perhaps boosts – we’re still thinking about that).

The art & science of evaluating influence on behavior

…which leads us to believe that the latest thinking on how people decide what to buy should also flow into the evaluation of energy efficiency programs that are designed to nudge buying behavior – which is a very different endeavor than programs that operate with monetary incentives.

The key question that needs to be answered by any impact evaluation is: How much energy savings were achieved as a result of the program?

Some behavioral programs get at this question by comparing energy bill data for a participant group and a control group, both randomly selected. The difference in energy bills is then attributed entirely to the behavioral program. But this random assignment approach doesn’t work for Enervee’s Choice Engine platform, which utilities make available to all of their customers, with no requirement to be logged into an account to use it. Therefore, evaluators rely on a mix of platform analytics (e.g., how many unique visitors access the site) and user surveys to determine how many efficient product purchases (and how much energy and greenhouse gas savings) are achieved by the platform.

Survey responses are commonly used in the efficiency evaluation industry to calculate so-called “net-to-gross” (NTG) ratios that are multiplied by the total efficient purchases made by active visitors to our platform to estimate those purchases that were made as a result of the platform. What we need to understand is whether the Choice Engine platform nudged a visitor to a more efficient purchase than they otherwise would have made, had they not visited the site.
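The NTG arithmetic described above can be sketched in a few lines of Python. This is only an illustration of the multiplication step, not any evaluator's actual method: the function name, the per-purchase savings figure and all input numbers are hypothetical.

```python
def net_purchases(gross_efficient_purchases: float, ntg_ratio: float) -> float:
    """Estimate the efficient purchases attributable to the platform.

    gross_efficient_purchases: efficient purchases made by active visitors
    ntg_ratio: survey-derived net-to-gross ratio (assumed non-negative)
    """
    if ntg_ratio < 0:
        raise ValueError("ntg_ratio must be non-negative")
    return gross_efficient_purchases * ntg_ratio


# Hypothetical inputs, for illustration only
gross = 10_000            # efficient purchases by active visitors
ntg = 0.6                 # assumed NTG ratio from a survey
kwh_per_purchase = 50     # assumed average annual savings per purchase

net = net_purchases(gross, ntg)
print(f"Net purchases: {net:.0f}")
print(f"Net savings: {net * kwh_per_purchase:.0f} kWh/year")
```

Everything downstream (energy and greenhouse gas savings) scales with the NTG ratio, which is why the way that ratio is derived matters so much.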

Pros & cons of the status quo approach

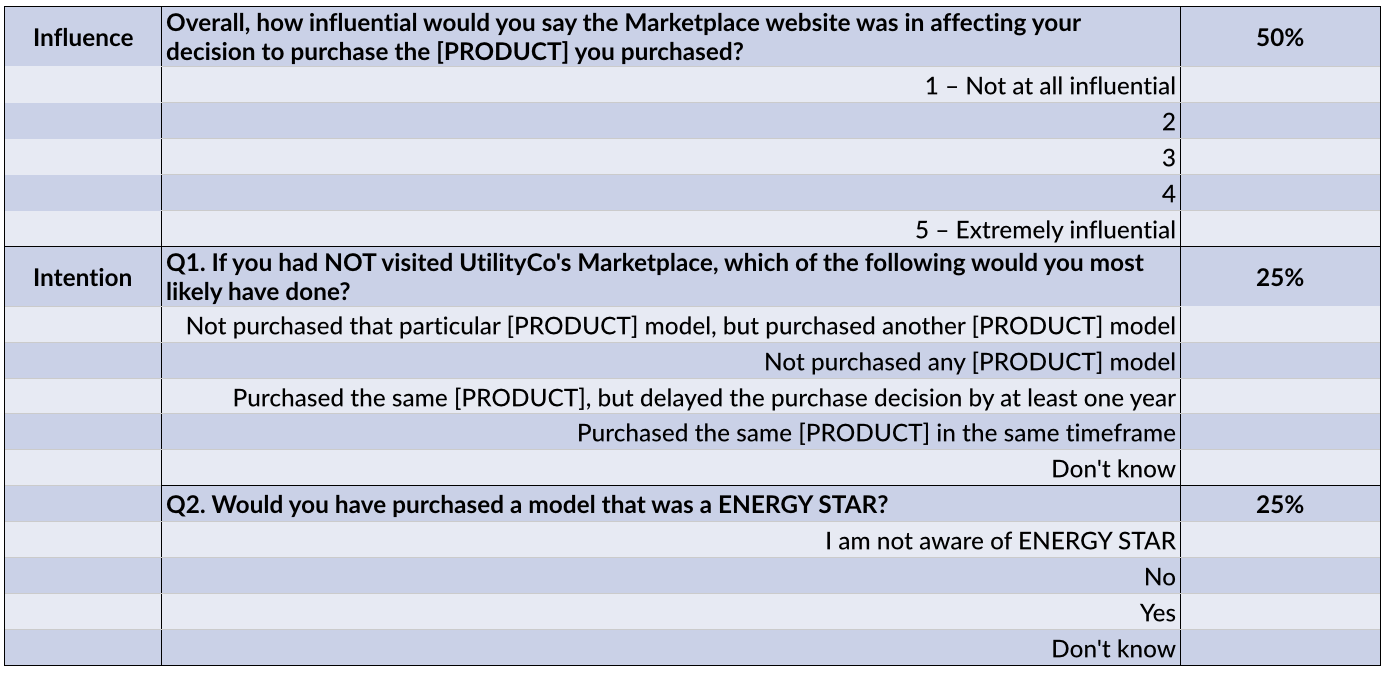

The customer self-report survey approach is applied almost universally across the country, and can be a cost-effective, transparent and flexible method for estimating NTG. Evaluators typically try to assess both program influence and intention (stated intent in absence of the program) to come up with a composite net-to-gross adjustment factor, usually with a weighting of 50:50 for influence and intention.
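To make the 50:50 weighting concrete, here is a minimal sketch of one way such a composite could be computed. The scoring is an assumption made for illustration: both inputs are taken to be already normalized to the 0–1 range, and stated intent to buy the same product without the program is treated as free-ridership. Real evaluations use their own scales and mappings.

```python
def composite_ntg(influence: float, no_program_intent: float,
                  influence_weight: float = 0.5) -> float:
    """Blend an influence score and an intention score into one NTG factor.

    influence: 0 (program had no influence) to 1 (fully influential)
    no_program_intent: 0 (would not have bought the efficient product
        without the program) to 1 (would certainly have bought it anyway)
    influence_weight: share given to influence; 0.5 gives the 50:50 split
    """
    for value in (influence, no_program_intent, influence_weight):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all inputs must be between 0 and 1")
    intention_component = 1.0 - no_program_intent  # higher intent, lower credit
    return (influence_weight * influence
            + (1.0 - influence_weight) * intention_component)


# Hypothetical respondent: moderate influence, fairly high stated intent
print(f"{composite_ntg(influence=0.4, no_program_intent=0.7):.2f}")
```

Note that both inputs are self-reported, so any bias in the survey answers flows straight into the composite.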

Although self-report surveys are widely used and considered standard practice, they have recognized flaws that tend to inflate estimates of free-ridership (purchases that would have happened even without the program), for example:

- Consumers’ inability to know what they would have done in a hypothetical alternative situation

- Tendency to rationalize past choices

- Consumers may fail to recognize the influence of the program (particularly relevant for Enervee’s Choice Engine, which relies on behavioral insights to change the choice architecture in ways visitors would not be aware of)

- Potential biases related to respondents’ giving “socially desirable” answers (people do not like to admit that their decisions are not sovereign).

In addition, we were unable to identify any studies in the academic literature that validated an “influence” scale (influence is typically assessed based on behavioral responses).

Asking about whether someone would have bought an ENERGY STAR product, had it not been for the Choice Engine, is also problematic, for a number of reasons:

- Social desirability bias (it’s good to buy ENERGY STAR, and the label has very high levels of consumer recognition)

- The market share of ENERGY STAR products varies widely by product category (5% or less for CFLs and heat pump water heaters and over 90% for LCD monitors and dishwashers). So responses to this question do not clarify whether someone would have bought an efficient product in the counterfactual (not all certified products are efficient)

- Our rigorous randomized controlled trial (RCT) experiments have documented a significant effect of the Enervee Score on top of the presence of the ENERGY STAR label. So, even if a consumer intended to buy something efficient, using the Enervee Choice Engine may result in an even more efficient purchase

Finally, the proposed scales for NTG adjustments – while common practice – are largely arbitrary, with no certainty that responses to the “intention” (or “influence”) questions reflect actual buying behavior with and without the Choice Engine.

Linking self-reported usefulness to the efficiency of product choices

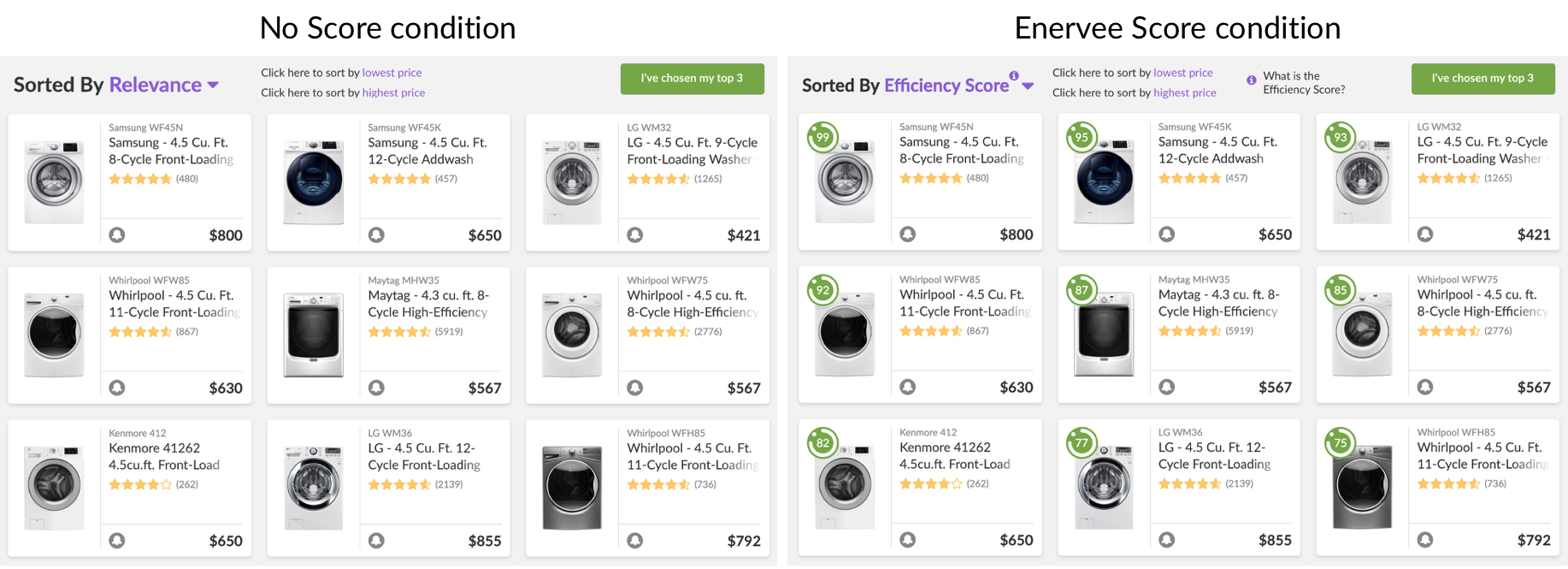

This is why Enervee – or, to be more precise, my colleague and behavioral scientist, Guy Champniss – recently undertook some influence research. The same participants were presented with an influence survey and asked to participate in a randomized controlled trial experiment. This allowed us to see how well the self-report measures we tested stacked up against product preferences under two conditions, a regular shopping platform and our platform with its modified choice architecture, featuring critical energy information proven to nudge more efficient choices.

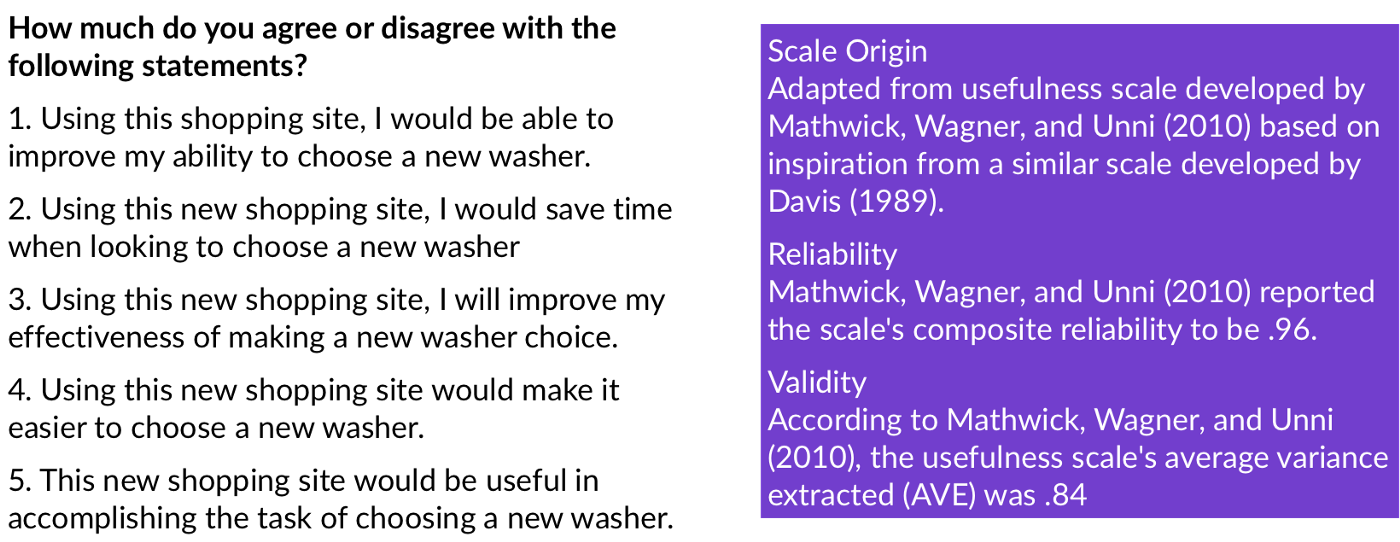

We tested a single question about how influential the online site was (see chart above) against a battery of five questions about how useful the site was (in terms of time savings, effectiveness, ability, and ease of choosing a new washer).

And the hands-down winner was the battery of “usefulness” questions:

- Consumers can readily judge if something was useful to them or not

- Usefulness does not come with the same social desirability bias as questions about “influence” or “ENERGY STAR”

- The battery of questions on which our modified set was based has been documented in the academic literature as being reliable

- RCT experiments confirmed that responses on the “usefulness” battery were significantly higher for the “Enervee Score” condition than for the “no Score” condition (significant at the 2% level). This relationship was more than twice as strong as for the “influence” question (which was just barely significant at the 5% level).

- There is a strong correlation between responses to the “usefulness” battery and the Enervee Score (proxy for energy efficiency) of products chosen, while there was no significant correlation with the responses to the “influence” question. This relationship can be used to establish an analytical basis for estimating NTG.

Usefulness therefore appears to be a valid proxy for platform influence on the efficiency of products chosen.
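The kind of analysis behind that conclusion can be sketched as follows: average each respondent's answers to the usefulness battery, then correlate that average with the Enervee Score of the product they chose. This is a hedged illustration rather than the study's actual analysis. The 1–7 response scale, the 0–100 score range and every data point below are made up for the example.

```python
from math import sqrt
from statistics import mean


def usefulness_score(responses):
    """Average one respondent's answers to the usefulness battery."""
    return mean(responses)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2)")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# Made-up survey data: five battery answers per respondent (assumed 1-7 scale)
battery_answers = [
    [6, 7, 6, 5, 6],
    [3, 4, 2, 3, 3],
    [5, 5, 6, 4, 5],
    [2, 3, 2, 2, 1],
    [7, 6, 7, 7, 6],
]
# Made-up Enervee Scores (assumed 0-100) of the products those respondents chose
chosen_scores = [88, 61, 79, 55, 93]

usefulness = [usefulness_score(answers) for answers in battery_answers]
print(f"r = {pearson_r(usefulness, chosen_scores):.2f}")
```

With real data, a fitted relationship of this kind is what could give NTG estimates an analytical basis, rather than an arbitrary scale.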

Understanding buying decisions to evaluate influence

It’s important to recall that over 60% of consumers nationwide (with numbers above 80% in California and Texas) think it’s important to buy efficient products for their homes, but face barriers in following through on their ambitions (such as lack of market transparency with respect to product efficiency and intuition bias that efficient products necessarily cost more).

Enervee’s Choice Engines are designed to change the choice architecture (provide a heuristic, visual cue to support intuitive evaluation, and a personalized savings/total cost calculator to support reflective, analytical decisions, as well as addressing the lay theory that efficient = expensive), such that consumers will make more energy efficient choices. Previous independent studies found that visitors rate these features highly in terms of their usefulness in facilitating their shopping experience. The goal is to nudge all consumers towards better choices, even if they didn’t intend to buy an efficient product. In research we did on Democrats and Republicans, we found that the Enervee Score worked equally well in both cases – but perhaps in different ways.

So, when it comes to assessing the impact of choice engine platforms, our modified “usefulness” measure – which correlates well with RCT results that reveal actual preferences with and without platform features – is likely more reliable than responses to counterfactual questions designed to tease out conscious intent. More generally, taking the behavioral science underpinnings of an intervention (its program theory and logic) into account can support more meaningful evaluations.

Had Tina Turner been a behavioral psychologist back in 1984, she might have sung:

What’s intention got to do, got to do with it?

What’s intention, but a second hand emotion?

What’s intention got to do, got to do with it?

Who needs a brain when a brain can be nudged?

And that slight change in lyrics might have just been enough to win that elusive American Music Award for Favorite Song…